The Rise of Data Centers: What Powers AI, Profits, and Pollution

Data centers are becoming increasingly important. This is partly due to the evolution of technology and the rising demand for data that comes with it. Ever wondered how Amazon knows what products to recommend, or how Spotify knows the perfect songs for you that just hit different? That’s machine learning at work. And what powers these machine learning models? Data.

Take Meta Platforms (formerly Facebook, Inc.).

According to the Wall Street Journal, Meta is reportedly in advanced talks to invest around $14 billion in Scale AI and bring on the startup’s CEO, Alexandr Wang, to help lead its AI efforts. Scale AI isn’t building its own language models from scratch. Instead, it’s a data-labeling company managing over 100,000 contractors globally: people who label images, write sentences, and generate the kind of content that helps AI models learn how to think and communicate.

And that’s just one example of data collection. Think about the scale of this operation: 100,000 people labeling data. Imagine how much data this one company generates.

Data helps companies learn about consumers like you: how you interact with websites, your preferences, your purchases. This allows them to personalize your experience and keep you on their platform longer, ultimately so they can profit. Sometimes you pay with money, but often, you’re paying with your time and attention.

Let’s take social media. It’s free, right? So how do platforms like YouTube, Twitter, and Instagram make money? Advertising. But not just any advertising—targeted ads. These platforms don’t want to waste time showing ads to people who won’t buy. So, how do they know who’s a good fit? Machine learning.

By analyzing your watch history, likes, clicks, and searches, platforms build a digital profile of you. That profile helps advertisers reach the “right” people. And while not every ad will hit, with enough quality data, these models get scary accurate.
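
To make the idea of a “digital profile” concrete, here is a minimal sketch. It is not how any real platform works: it assumes a hypothetical list of interaction events, each tagged with a content category, and hypothetical weights (a purchase counts more than a click). The resulting profile is just each category’s share of the weighted activity.

```python
from collections import defaultdict

# Hypothetical weights: stronger signals of interest count for more.
# These numbers are illustrative assumptions, not any platform's real values.
EVENT_WEIGHTS = {"view": 1.0, "click": 2.0, "like": 3.0, "purchase": 5.0}


def build_profile(events):
    """Turn (event_type, category) pairs into interest shares that sum to 1."""
    scores = defaultdict(float)
    for event_type, category in events:
        # Unknown event types contribute nothing.
        scores[category] += EVENT_WEIGHTS.get(event_type, 0.0)
    total = sum(scores.values())
    if total == 0:
        return {}
    return {category: score / total for category, score in scores.items()}


def top_interest(profile):
    """Return the category with the highest share, or None for an empty profile."""
    return max(profile, key=profile.get) if profile else None


if __name__ == "__main__":
    history = [
        ("view", "fitness"),
        ("click", "fitness"),
        ("like", "cooking"),
        ("purchase", "fitness"),
        ("view", "travel"),
    ]
    profile = build_profile(history)
    print(profile)
    print("Show ads for:", top_interest(profile))
```

Here the profile ends up dominated by fitness (8 of 12 weighted points), so fitness ads would be the obvious pick. Real platforms use far richer signals and machine learning models rather than fixed weights; this only illustrates the basic idea of turning behavior into a profile.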

Companies may also sell your data to third parties, who then use it to learn more about you and turn that knowledge into profit. This is why you’ve probably heard the phrase “data is the new oil.”

Companies like Amazon, Google, and Microsoft are investing billions into data centers.

McKinsey estimates that data centers worldwide will require about $6.7 trillion in investment by 2030 to keep up with the demand for compute power (Noffsinger et al., 2025). That’s not loose change. These companies believe this infrastructure is essential, and they’re right.

Every digital footprint you leave behind, from what you search to what you add to your online shopping cart, builds your profile. If you’ve ever used the internet, you probably have a digital identity floating around out there, made of data points that algorithms use to categorize you. Be careful out there: the internet is the digital Wild West.

Now let’s talk about where that data lives.

Not a Cloud, But a Warehouse

The "cloud" isn’t a literal cloud. It’s a network of computers in remote locations called data centers.

This is Roger, a Google employee, taking care of the infrastructure in Google’s Mayes County, Oklahoma, data center. This is what the cloud is.

These data centers store and process all that information. Companies connect to them through various kinds of cabling, and when speed is critical, they use optical fiber. Optical fiber is everywhere: underground, within and between countries, and across the ocean floor.

Optical fiber transmits data as pulses of light, making it incredibly fast, which is especially important for technologies that require real-time decision-making, like self-driving cars.

A Quick Example: Edge AI + Cloud Coordination

Say a car five miles ahead of you detects black ice and uploads that info to the cloud. Your car, traveling at 65 mph, relies on a lightning-fast fiber-connected network to receive that alert in milliseconds. A delay in that signal? It could mean a crash. This combo of high-powered data centers and fiber infrastructure is what allows AI to perform in real-world situations.
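
To put “milliseconds” in perspective, here is a rough back-of-the-envelope sketch. It assumes light in fiber travels at about two-thirds of its speed in a vacuum (a refractive index of about 1.5) and a hypothetical 1,000 km round trip between the road and a data center. It ignores wireless links, routing, and processing time, which add more delay in practice. It answers only one question: how far does a car at 65 mph travel while it waits?

```python
METERS_PER_MILE = 1609.344
SPEED_OF_LIGHT_M_S = 299_792_458
# Assumption: a round number; typical silica fiber is roughly 1.44 to 1.47.
FIBER_REFRACTIVE_INDEX = 1.5


def mph_to_m_per_s(mph):
    """Convert miles per hour to meters per second."""
    return mph * METERS_PER_MILE / 3600


def fiber_delay_s(route_km):
    """One-way propagation delay (seconds) over a fiber route of the given length."""
    speed_in_fiber = SPEED_OF_LIGHT_M_S / FIBER_REFRACTIVE_INDEX
    return route_km * 1000 / speed_in_fiber


def distance_during_delay_m(mph, delay_s):
    """How far a car moving at `mph` travels while waiting `delay_s` seconds."""
    return mph_to_m_per_s(mph) * delay_s


if __name__ == "__main__":
    car_mph = 65
    # Hypothetical route: 500 km up to the data center plus 500 km back to your car.
    propagation = fiber_delay_s(500) * 2
    scenarios = [
        ("fiber propagation only", propagation),
        ("100 ms of total latency", 0.1),
        ("1 s of total latency", 1.0),
    ]
    for label, delay in scenarios:
        meters = distance_during_delay_m(car_mph, delay)
        print(f"{label}: {delay * 1000:.1f} ms -> car moves {meters:.2f} m")
```

Under these assumptions, fiber propagation itself costs only about 5 ms, a fraction of a meter of travel at 65 mph, while a one-second delay means roughly 29 meters. That gap is why the speed of the whole network, not just the fiber, matters.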

Qualcomm & Alphawave: Speed Matters

According to the Wall Street Journal, Qualcomm recently agreed to buy Alphawave IP for $2.4 billion to strengthen its portfolio in AI, data centers, and storage. Alphawave specializes in high-speed, low-power connectivity. This huge price tag shows just how vital fast, efficient data transfer is to AI and cloud infrastructure (Walker & Chopping, 2025).

In simple terms, this technology helps Qualcomm move data faster and more efficiently, and that matters when it comes to data centers and AI.

Speed isn’t optional in today’s environment; it’s everything.

AI: Old School Origins, New School Momentum

AI may feel like a buzzword now, but it’s been around for decades. So how did we get here? Back in 1997, IBM’s Deep Blue beat world chess champion Garry Kasparov. In 2011, IBM’s Watson crushed Jeopardy! champions (IBM Research, 2013).

Impressive, but Watson went further by helping doctors diagnose illnesses.

IBM once reported that only 20% of the knowledge used by physicians to make decisions is evidence-based. That gap leads to errors: 1 in 5 diagnoses are incorrect or incomplete, and over a million medication errors happen in the U.S. each year. That’s where AI can help (IBM, n.d.).

Since then, AI has grown into more user-friendly tools like LLMs (Large Language Models). According to IBM, we’re still in the Narrow AI stage: systems that perform a single task well. General AI and Super AI remain theoretical for now.

But Apple’s recent research throws a monkey wrench in all this AI excitement.

Apple and the "Illusion of Thinking"

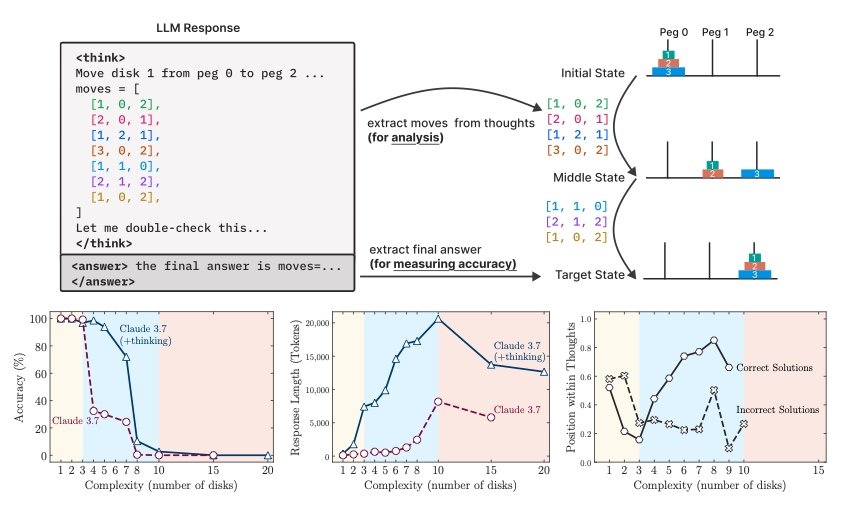

Apple’s report, “The Illusion of Thinking,” explores Large Reasoning Models (LRMs)—a cousin of LLMs—designed to solve more complex, human-like reasoning tasks.

So how did Apple test the capability of AI? Apple created custom puzzle environments to see how well these models "think." The findings? As problems get more complex, both LLMs and LRMs eventually collapse in performance: beyond a certain level of complexity, their accuracy drops to zero (The Illusion of Thinking, 2025).

One quote from the report sums it up:

"State-of-the-art LRMs still fail to develop generalizable problem-solving capabilities, with accuracy ultimately collapsing to zero beyond certain complexities..." (The Illusion of Thinking, 2025).

That’s worth keeping in mind as we chase the idea of "thinking machines." Still, Apple’s paper alone won’t stop AI investment, and that investment brings a major problem with it.

The Power Problem

All this tech runs on power, and these data centers eat up a lot of it.

To deepen the perspective on energy usage and sustainability, I spoke with a tech professional who has experience working with mainframe systems and understands the evolving infrastructure behind data centers.

“Whether you like it or not, the AI revolution is happening. There will be challenges; socially, environmentally, politically but these are the necessary hurdles of progress,” said Jack Levi, a tech founder and experienced professional in mainframe systems and cloud infrastructure. “Data centers use ~5% of electricity now, but that’s expected to rise to 12% by 2028. That’s a lot of energy — and it puts serious demand on the grid.”

Levi makes a great point: the genie is out of the bottle. AI will most likely become a normal part of society, so we need to solve the energy consumption problem that comes with it. And as companies grow, so do the data collection efforts that power their AI tools.

The figures Levi cited come from a U.S. Department of Energy (DOE) announcement of the 2024 Report on U.S. Data Center Energy Use. Produced by Lawrence Berkeley National Laboratory, the report covers data center energy use from 2014 through projections for 2028.

The report finds that data centers consumed about 4.4% of total U.S. electricity in 2023 and are expected to consume approximately 6.7 to 12% of total U.S. electricity by 2028 (Department of Energy, 2024).
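
A quick calculation shows how steep that projection is. This sketch uses only the DOE percentages above. Note that it measures growth in data centers’ share of U.S. electricity, not their absolute consumption, since total U.S. demand will also change over the period.

```python
# Figures from the DOE summary above (percent of total U.S. electricity).
SHARE_2023 = 4.4
SHARE_2028_LOW = 6.7
SHARE_2028_HIGH = 12.0
YEARS = 2028 - 2023


def growth_multiple(start, end):
    """How many times larger `end` is than `start`."""
    return end / start


def annual_growth_rate(start, end, years):
    """Compound annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    for label, end in [("low", SHARE_2028_LOW), ("high", SHARE_2028_HIGH)]:
        multiple = growth_multiple(SHARE_2023, end)
        rate = annual_growth_rate(SHARE_2023, end, YEARS)
        print(f"{label} scenario: share x{multiple:.2f}, about {rate:.1%} per year")
```

Going from 4.4% to somewhere between 6.7% and 12% in five years means the share ends up about 1.5 to 2.7 times its 2023 level, which works out to compound growth of roughly 9% to 22% per year.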

Google, in its 2024 Environmental Report, acknowledged this issue and outlined steps like model optimization and infrastructure efficiency to reduce emissions.

Google is pushing for more renewable and nuclear sources, but there’s a catch: gains from renewables have been offset by declines in nuclear energy. Water usage is another issue: Google’s data centers used enough water in 2023 to irrigate about 41 golf courses annually in the southwestern U.S. (Google, 2024).

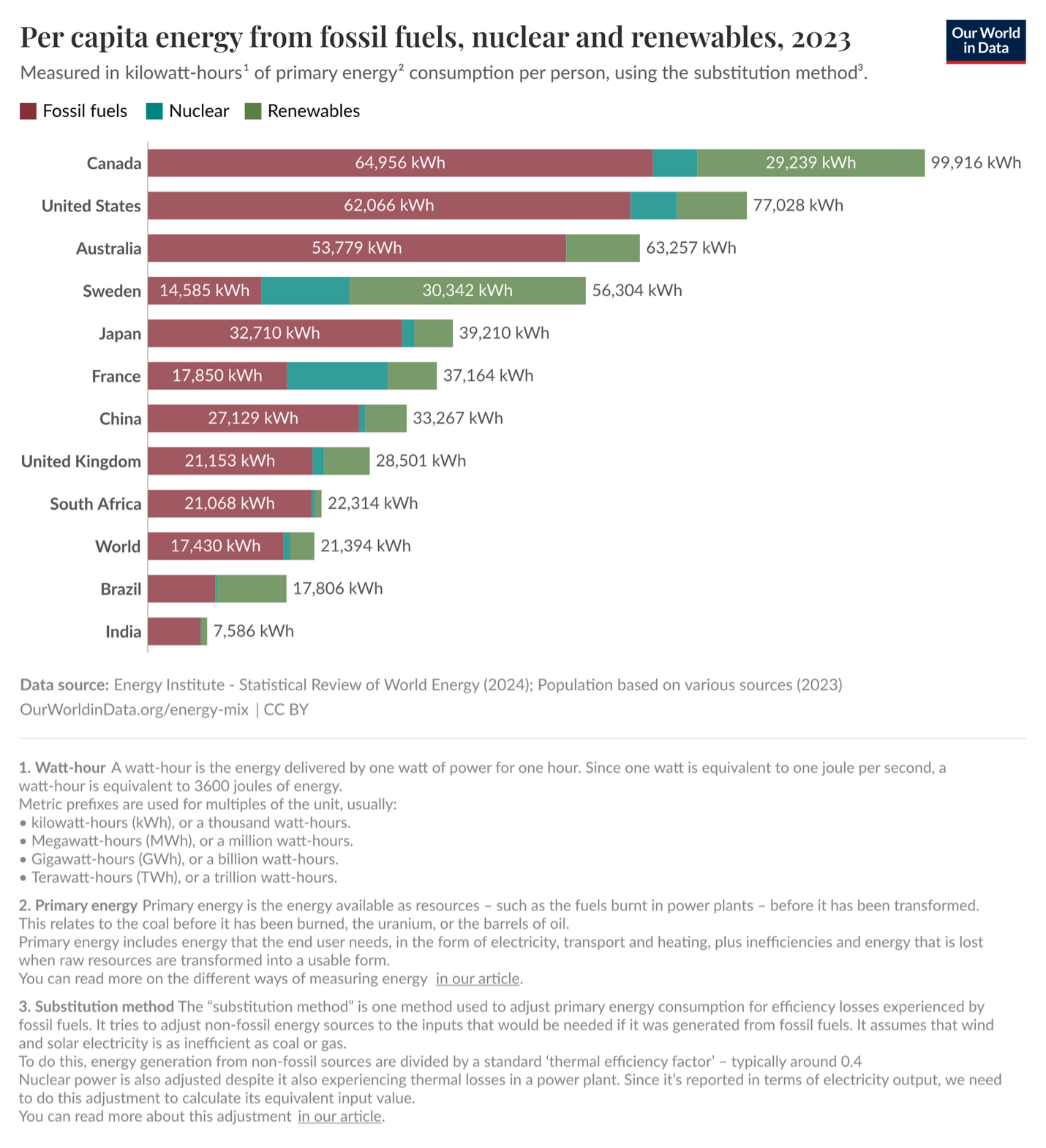

The shift in power generation is happening NOW, with renewables and nuclear increasing their share of the energy mix.

Who’s Powering Who?

The power problem is affecting leading AI companies.

OpenAI recently tapped Google Cloud for more compute capacity—even though Google is technically a rival (Cai & Hu, 2025).

This shows how big compute demand is becoming, and Google isn’t afraid to take a piece of the pie. Microsoft originally supplied OpenAI with this computing power, but with ChatGPT growing rapidly, Microsoft alone isn’t enough anymore.

But where does this power come from? Power starts at plants—coal, nuclear, hydro, wind, solar. Most data centers pull from the grid, but that grid has limits (Moore, 2025).

So, what are our options to solve this problem?

“The obvious realistic choice in the long term is widespread nuclear. It’s scalable, carbon neutral, and the safest form of electricity generation we have, but societal acceptance is still a major barrier,” said Levi.

And many agree with Levi. McKinsey projects that nuclear will play a part in the future energy mix, alongside gas, solar, and wind. However, while nuclear and hydrogen will help, they aren’t projected to grow much in the short term due to cost and political complexity.

Data centers are growing rapidly, and their share of global energy use is expected to keep rising over the next decade. As AI expands, so does energy usage.

So What Can We Do?

Google proposes investing in carbon offset programs and improving equipment efficiency. That’s a start—but not enough.

If you’re a business owner, you can take action by knowing where your cloud services come from. Look into how sustainable your providers really are. Ask the hard questions. At the end of the day, though, business owners can only do so much with the infrastructure available. The reality is that our growing tech world needs better power options.

It will be interesting to see how AI and power play out as technology continues to evolve. The rise of LLM products like ChatGPT and Claude shows there is a consumer market. Now, with AI agents entering the conversation, the workload keeps increasing. Companies need the infrastructure and power supply to support this AI boom, which means generating more power, and that could harm the planet if it isn’t handled responsibly.

AI may be the future, but there is no AI if there is no Earth.

Let’s make sure we don’t sacrifice one for the other.

Works Cited

Cai, K., & Hu, K. (2025, June 10). Exclusive: OpenAI taps Google in unprecedented cloud deal despite AI rivalry, sources say. Reuters. https://www.reuters.com/business/retail-consumer/openai-taps-google-unprecedented-cloud-deal-despite-ai-rivalry-sources-say-2025-06-10/

Department of Energy. (2024, December 20). DOE releases new report evaluating increase in electricity demand from data centers. Energy.gov. https://www.energy.gov/articles/doe-releases-new-report-evaluating-increase-electricity-demand-data-centers

Google. (2024). 2024 environmental report. https://www.gstatic.com/gumdrop/sustainability/google-2024-environmental-report.pdf

IBM. (n.d.). Watson is helping doctors fight cancer. https://web.archive.org/web/20131111173831/http://www-03.ibm.com/innovation/us/watson/watson_in_healthcare.shtml

IBM Research. (2013). Watson and the Jeopardy! Challenge. YouTube. https://www.youtube.com/watch?v=P18EdAKuC1U

Moore, T. (2025, June 3). Data Center Energy Consumption & Power Sources. Enconnex. https://blog.enconnex.com/data-center-energy-consumption-and-power-sources

Noffsinger, J., Patel, M., & Sachdeva, P. (2025, April 28). The cost of compute: A $7 trillion race to scale data centers. McKinsey & Company. https://www.mckinsey.com/industries/technology-media-and-telecommunications/our-insights/the-cost-of-compute-a-7-trillion-dollar-race-to-scale-data-centers

The illusion of thinking: Understanding the strengths and limitations of reasoning models via the lens of problem complexity. (2025). Apple Machine Learning Research. https://machinelearning.apple.com/research/illusion-of-thinking

Walker, I., & Chopping, D. (2025, June 9). Qualcomm to buy Alphawave IP for $2.4 billion. The Wall Street Journal. https://www.wsj.com/finance/investing/qualcomm-to-buy-alphawave-ip-for-2-4-billion-f48478ed?mod=Searchresults_pos1&page=1